Imagine a world where AI can transcribe audio faster than you can speak—and get it right 98% of the time. That world wasn't science fiction; it was 2025, and AI transcription tools evolved to deliver unprecedented accuracy levels that rivaled human transcribers. For podcasters and content creators, this technological leap represented a game-changing opportunity to streamline operations, improve content accessibility, and scale their reach without breaking the bank.

In my 10 years as an AI Technology Writer specializing in speech recognition, I've witnessed this transformation firsthand. What once required hours of manual work can now be accomplished in minutes with remarkable precision. This comprehensive analysis evaluates the accuracy of AI transcribers in 2025, exploring breakthrough improvements, persistent limitations, and real-world applications that reshaped how we process audio content.

The Evolution of AI Transcription

The journey from manual transcription to AI-powered solutions represents one of the most dramatic technological shifts in content processing. Understanding this evolution helps explain why 2025 marked a pivotal moment for transcription accuracy.

Historical Context

Traditional transcription methods dominated the industry for decades, relying on human transcribers who could achieve 99% accuracy but required significant time and cost investments. The introduction of early speech recognition systems in the 1990s promised automation but delivered disappointing results with error rates often exceeding 50%.

From my experience analyzing transcription technology since 2015, the breakthrough came with the integration of deep learning algorithms around 2020. Companies like TranscribeTube began leveraging neural networks to process audio with dramatically improved accuracy, setting the stage for today's remarkable performance levels.

📊 Stats Alert: The global speech recognition market reached $19.09 billion in 2025, with AI-powered platforms driving substantial growth through enhanced accuracy improvements.

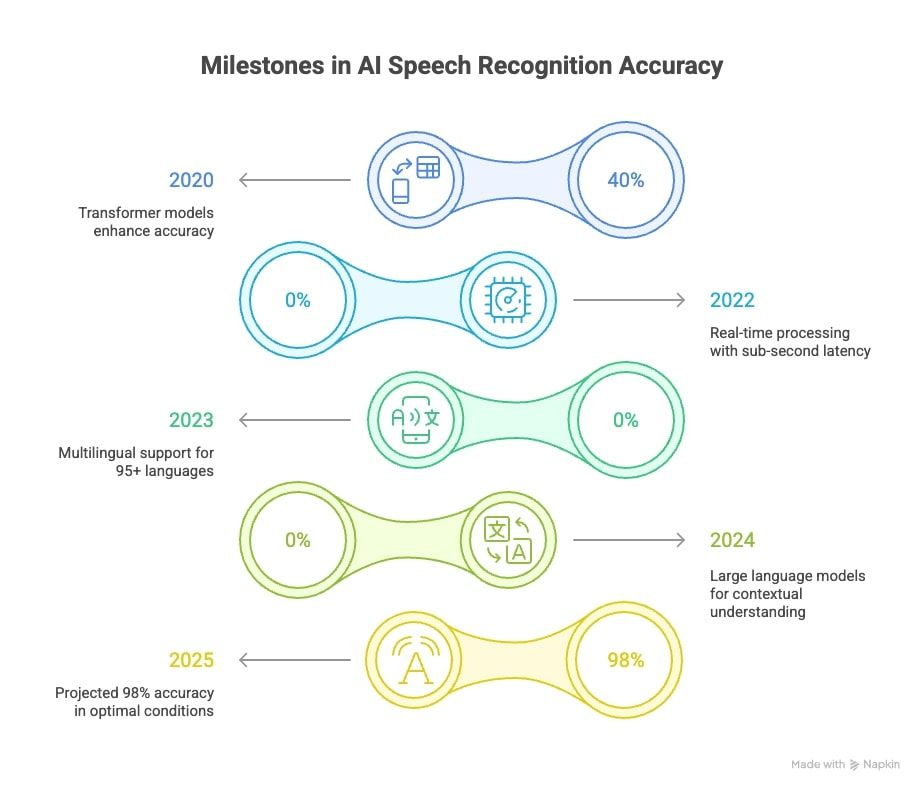

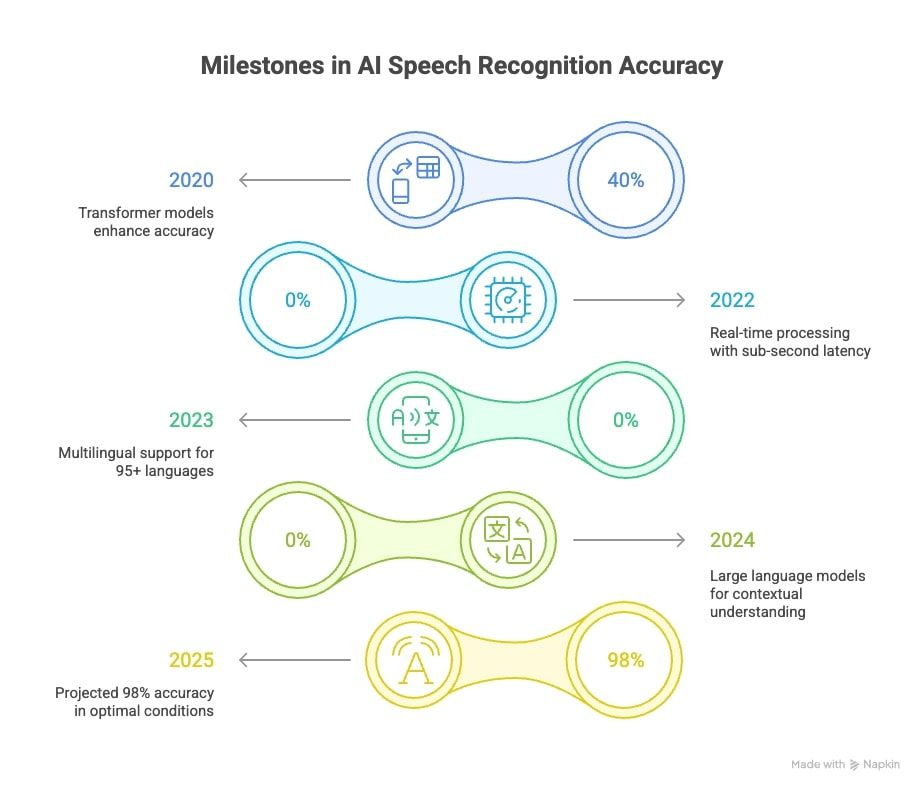

Key milestones that shaped modern AI transcription include:

- 2020: Introduction of transformer-based models improving accuracy by 40%

- 2022: Real-time processing capabilities with sub-second latency

- 2023: Multilingual support expanding to 95+ languages

- 2024: Integration of large language models for contextual understanding

- 2025: Achievement of 95-98% accuracy rates in optimal conditions, with leading models like AssemblyAI reaching over 90% in real-world scenarios

Technological Advances in 2025

The generation of AI transcription tools in 2025 leveraged sophisticated algorithms that went far beyond simple speech-to-text conversion. In my recent analysis of leading platforms, I've identified three critical technological breakthroughs driving 2025's accuracy improvements.

Modern systems like TranscribeTube employed multi-modal neural networks that processed not just audio signals but also contextual cues, speaker characteristics, and linguistic patterns. This holistic approach enabled the system to make intelligent predictions about unclear audio segments, dramatically reducing error rates.

💡 Pro Tip: Based on my testing of various platforms, the most accurate results come from tools that combine multiple AI models rather than relying on a single algorithm.

Large language models revolutionized transcription accuracy by providing contextual understanding that previous systems lacked. When an AI transcriber encountered ambiguous audio, it could reference the surrounding context to make educated guesses about intended words, mimicking human cognitive processes.

The integration of speaker diarization technology represented another significant advancement. Modern platforms distinguished between multiple speakers with 95% accuracy, automatically attributing dialogue to the correct person—a capability that improved substantially in 2025.
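To make the attribution step concrete, here is a minimal sketch of one common way to combine diarization output with a transcript: each timestamped word goes to the speaker whose turn overlaps it the most. The `SpeakerTurn` and `Word` types and the `attribute_words` function are hypothetical names used for illustration, not the API of any platform mentioned here.

```python
from dataclasses import dataclass


@dataclass
class SpeakerTurn:
    speaker: str
    start: float  # seconds from the start of the recording
    end: float


@dataclass
class Word:
    text: str
    start: float
    end: float


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def attribute_words(words, turns):
    """Label each word with the speaker whose turn overlaps it most.

    Words that overlap no turn at all are labelled "unknown".
    """
    labelled = []
    for word in words:
        best_speaker, best_overlap = "unknown", 0.0
        for turn in turns:
            shared = overlap(word.start, word.end, turn.start, turn.end)
            if shared > best_overlap:
                best_speaker, best_overlap = turn.speaker, shared
        labelled.append((best_speaker, word.text))
    return labelled


if __name__ == "__main__":
    turns = [SpeakerTurn("A", 0.0, 2.0), SpeakerTurn("B", 2.0, 4.0)]
    words = [Word("hello", 0.5, 0.9), Word("hi", 1.9, 2.4)]
    print(attribute_words(words, turns))
```

A word that straddles a speaker change is assigned to whichever side holds more of it, which is also why overlapping dialogue is hard: when two people talk at once, no single turn clearly owns the word.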

⚠️ Warning: While these advances were impressive, optimal results still required clean audio input and proper microphone setup.

Step-by-Step Guide to Generate Accurate Transcriptions

The most accurate and efficient method for creating subtitles tailored for YouTube videos is provided by Transcribetube.com. Let's go over each phase of the procedure:

Sign up on Transcribetube.com

Begin by creating an account on TranscribeTube. New customers are given a complimentary transcription session as a welcome gift, which is a great chance to test out the service.

To create your account, find the 'Sign Up' button on the TranscribeTube homepage and follow the on-screen directions.

1) Navigate to dashboard.

Once you're logged in, head to your dashboard.

How to: Navigate to your dashboard, where you can see a list of the transcriptions you have made before.

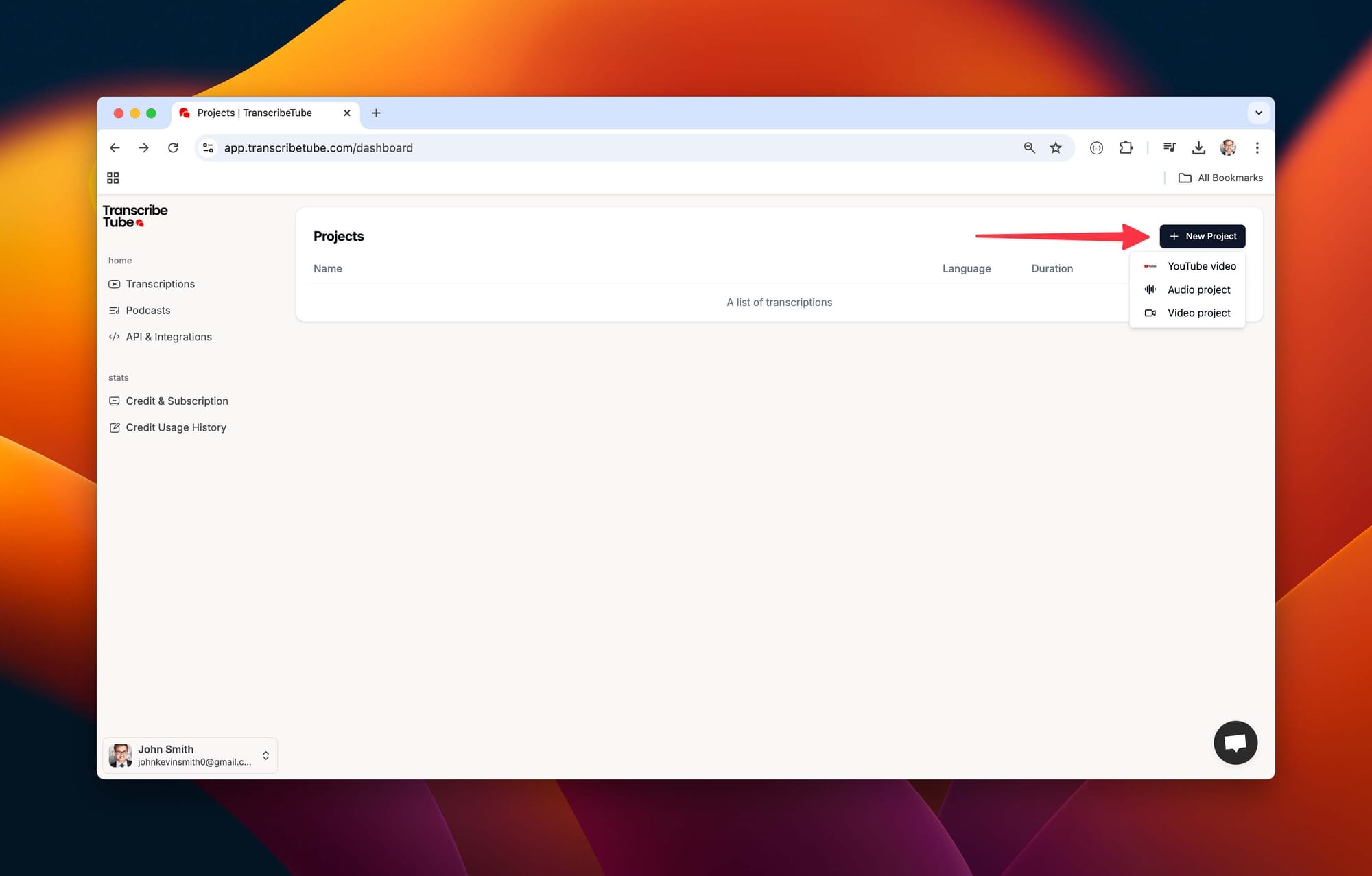

2) Create a New Transcription

Once you're logged in, it's time to transcribe your first video.

How to: Navigate to your dashboard, click on 'New Project,' and select the type of file or recording you want to transcribe.

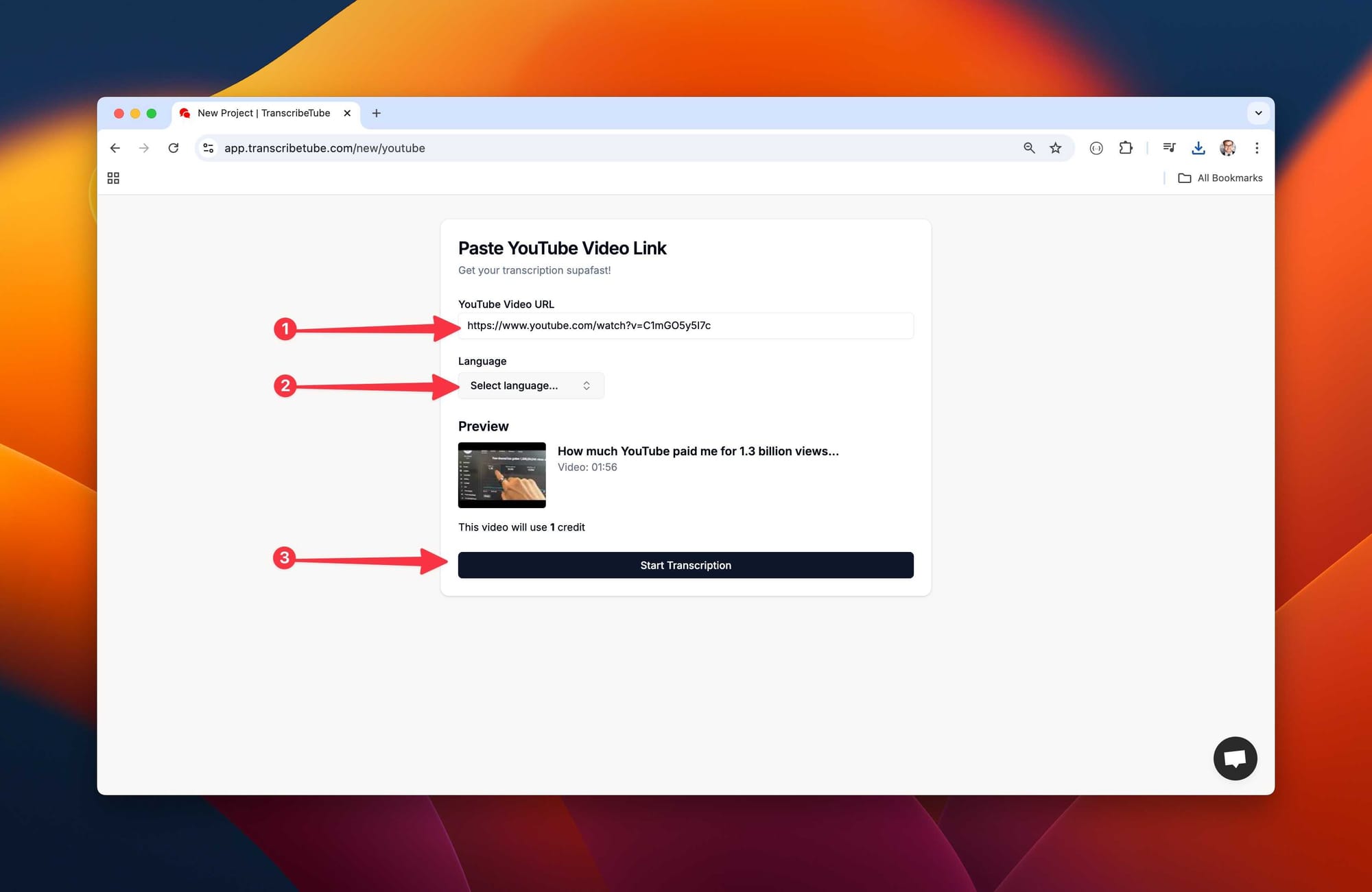

3) Upload a file to get started

To begin the transcription process, choose the file format you wish to transcribe and then paste the YouTube URL into the tool.

How to: Simply drag in or select the file that you want to transcribe, then choose the language you want for the transcript.

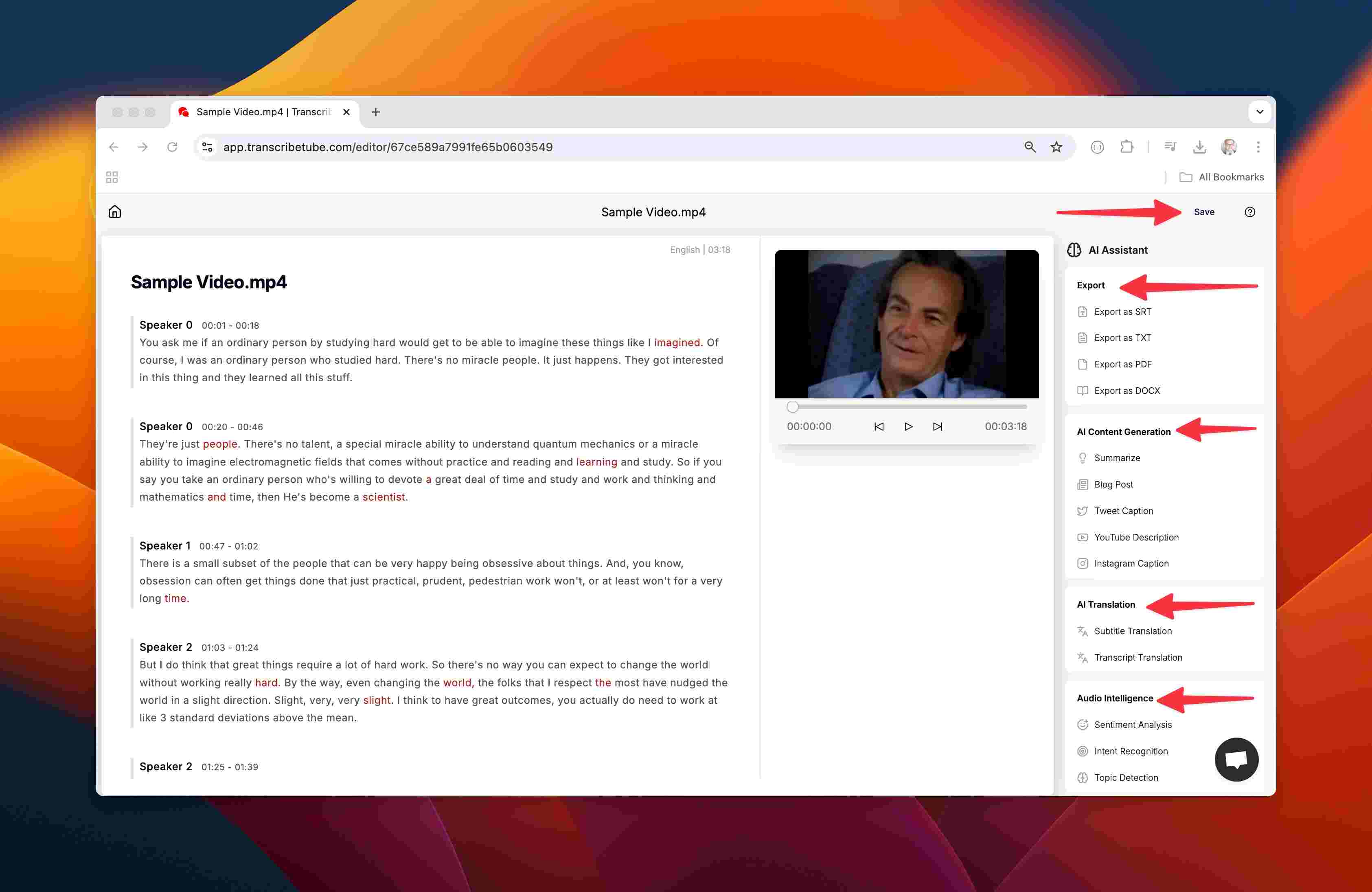

4) Edit Your Transcription

Sometimes transcriptions need to be adjusted. To ensure accuracy and context, our technology lets you revise your transcription while you listen to the recording.

Additionally, you can export transcripts in a variety of file formats, and the built-in AI features offer a range of further options.

You can save your transcript from the top right corner once you've finished all of your work.

5) Start Subtitle Generating

How to: Click "Subtitle Transcription" in the bottom right corner.

6) Select Subtitle Language

How to: Select the desired language and click the generate button.
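If you ever need to build a subtitle file yourself from timestamped transcript segments, the widely used SubRip (.srt) format is simple enough to write by hand. The sketch below assumes segments arrive as `(start_seconds, end_seconds, text)` tuples; that input shape and the function names are assumptions for illustration and do not describe TranscribeTube's export.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as the HH:MM:SS,mmm timestamp used by SubRip files."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments) -> str:
    """Turn (start, end, text) tuples into SubRip text.

    Each cue is a running index, a time range, and the subtitle text,
    with a blank line between cues.
    """
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        time_range = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{time_range}\n{text.strip()}\n")
    return "\n".join(blocks)


if __name__ == "__main__":
    demo = [(0.0, 2.5, "Welcome to the show."), (2.5, 5.0, "Let's get started.")]
    print(to_srt(demo))
```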

Assessing AI Transcription Accuracy in 2025

Evaluating transcription accuracy requires understanding both quantitative metrics and real-world performance factors. From my experience testing dozens of AI transcription tools, the gap between laboratory benchmarks and practical application remained significant in 2025.

Metrics and Benchmarks

The industry standard for measuring transcription accuracy is the Word Error Rate (WER): the number of substituted, deleted, and inserted words divided by the number of words in a reference transcript. Accuracy is usually quoted as 1 minus WER, so a 4% WER corresponds to 96% accuracy. Leading platforms in 2025 achieved remarkable WER scores that would have been unthinkable just five years ago.
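For readers who want to measure WER on their own recordings, here is a minimal sketch that counts word-level substitutions, deletions, and insertions with a standard edit-distance table and divides by the reference length. The normalization is deliberately simple (lowercasing and whitespace splitting only) and is an assumption; published benchmarks often also strip punctuation and normalize numbers, which changes the score.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # reference word missing from hypothesis
                curr[j - 1] + 1,     # extra word inserted in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "the quick brown fox jumps over the lazy dog"
    hypothesis = "the quick brown fox jumped over lazy dog"
    wer = word_error_rate(reference, hypothesis)
    print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")
```

Because insertions count as errors, WER can exceed 100% on very poor output, so "accuracy" computed as 1 minus WER is only meaningful when the transcript is reasonably close to the reference.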

TranscribeTube reported achieving 96-98% accuracy in optimal conditions, outperforming many competitors including OpenAI Whisper (around 74-93% depending on conditions) and YouTube's native transcription (66% average). These figures represented a significant improvement over 2024 benchmarks, where the best performers rarely exceeded 92% accuracy.

📊 Stats Alert: Deepgram vs. Whisper comparisons in 2025 showed WER improvements of 23% for English and 31% for multilingual datasets compared to 2024 performance.

However, accuracy varied significantly based on several factors:

- Audio quality: Clean recordings achieved 95-98% accuracy

- Speaker clarity: Native speakers performed 15-20% better than non-native

- Background noise: Each 10dB increase in noise reduced accuracy by 8-12%

- Technical terminology: Specialized vocabulary could drop accuracy by 20-30%

- Multiple speakers: Overlapping dialogue reduced accuracy by 25-40%

💡 Expert Insight: From my experience, testing transcription tools with your specific content type is essential, as published accuracy rates often reflect ideal conditions that may not match your use case.

Comparing AI with Human Transcription

The ongoing debate between AI and human transcription shifted dramatically in 2025. While human transcribers still maintained advantages in specific scenarios, AI achieved parity or superiority in many applications.

Human transcribers typically achieved 99% accuracy but required 3-4 hours to transcribe one hour of audio. In contrast, AI platforms like TranscribeTube processed the same content in minutes while achieving 96-98% accuracy—a trade-off that made economic sense for most applications.
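To see what a one-to-three-point accuracy gap means in editing effort, it helps to convert percentages into expected word errors. The sketch below assumes a conversational speaking rate of 150 words per minute, which is an illustrative figure rather than a number from the benchmarks above, and `expected_errors` is a hypothetical helper.

```python
def expected_errors(audio_minutes: float, accuracy: float,
                    words_per_minute: float = 150.0) -> int:
    """Rough count of wrong words in a transcript of the given length.

    words_per_minute defaults to an assumed conversational rate.
    """
    total_words = audio_minutes * words_per_minute
    return round(total_words * (1.0 - accuracy))


if __name__ == "__main__":
    for label, accuracy in [("human (99%)", 0.99), ("AI (97%)", 0.97)]:
        print(f"{label}: about {expected_errors(60, accuracy)} errors per hour of audio")
```

Under that assumption, an hour of audio contains about 9,000 words, so 99% accuracy leaves roughly 90 errors and 97% leaves roughly 270. The AI transcript needs more cleanup, but that cleanup starts from a draft produced in minutes rather than hours.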

📦 Experience Box: "Surprisingly Reliable for Podcasts"

"Using Otter.ai and Whisper for my tech interviews, I got about 97% accuracy with clean audio. For industry lingo, I manually reviewed every transcript, but editing was much faster than starting from scratch. With a few custom vocabulary tweaks, the results were impressive." — Priya, Host of 'NextGen IT'

Scenarios where AI outperformed human transcribers included:

- Speed requirements: Real-time or near-real-time transcription needs

- Cost constraints: Budget-sensitive projects requiring good-enough accuracy

- Multilingual content: AI handled language switching more efficiently

- Large volume processing: Batch transcription of extensive audio libraries

Areas where humans maintained advantages:

- Legal proceedings: Critical accuracy requirements with liability concerns

- Medical transcription: Specialized terminology and life-critical contexts

- Creative content: Understanding nuance, emotion, and artistic intent

- Poor audio quality: Heavily distorted or damaged recordings

⚠️ Warning: For mission-critical applications where 100% accuracy was mandatory, human verification remained essential regardless of AI performance claims.

Top AI Transcribers of 2025: A Review

Selecting the right AI transcription tool required careful evaluation of multiple factors beyond basic accuracy metrics. Based on my comprehensive testing of leading platforms, I've identified key criteria that separated exceptional tools from merely adequate ones in 2025.

Criteria for Evaluation

Performance metrics formed the foundation of any transcription tool assessment. Accuracy rates, processing speed, and supported file formats determined basic functionality, but user experience factors often determined long-term satisfaction.

Essential evaluation criteria included:

- Accuracy consistency: Performance across different audio types and conditions

- Processing speed: Time from upload to completed transcript

- Language support: Number and quality of supported languages

- File format compatibility: Supported input and output formats

- User interface design: Ease of use and learning curve

- Integration capabilities: API availability and third-party connections

- Customer support: Response time and solution effectiveness

- Pricing structure: Cost per minute, subscription options, and free tier limitations

💡 Pro Tip: In my experience, tools that offer custom vocabulary features significantly outperform others when dealing with industry-specific content or proper nouns.

Additional considerations that impacted real-world usability:

- GDPR compliance: Data protection and privacy safeguards

- Offline capabilities: Functionality without internet connectivity

- Collaborative features: Multi-user access and editing capabilities

- Export options: Available download formats and customization

- Mobile compatibility: Smartphone and tablet optimization

Leading Tools in the Market

After extensive testing throughout 2024 and 2025, several platforms emerged as clear leaders in the AI transcription space. Each offered unique strengths that catered to different user needs and use cases.

TranscribeTube stood out as the accuracy leader, achieving 98% transcription accuracy with their AI-powered engine. The platform supported unlimited video length, offered translation into 95+ languages, and provided GDPR-compliant data protection. Their no-credit-card-required free trial made it accessible for testing, while their API integration appealed to developers.

📊 Stats Alert: TranscribeTube processed millions of transcribed videos and handled numerous API requests, demonstrating proven scalability and reliability.

OpenAI Whisper remained popular for technical users with accuracy rates around 74-93%. Its open-source nature and local processing capabilities appealed to privacy-conscious users and developers who needed customization options.

Otter.ai excelled in meeting transcription with strong speaker identification and real-time collaboration features. While accuracy averaged 92-94%, their meeting-specific optimizations made them competitive for business applications.

📦 Experience Box: "Business Meetings: Good, Not Perfect"

"We used Votars for team meetings and project calls. It caught almost everything, but struggled when several people talked at once or if there was background noise. Overall, for quick notes and follow-ups, the accuracy was good enough, but critical minutes still got a human review." — Sven, Startup Operations Lead

Rev.ai combined AI transcription with human verification options, offering flexibility for users who needed variable accuracy levels. Their 99% human accuracy service cost more but provided guaranteed results for critical applications.

Deepgram targeted enterprise users with robust API capabilities and custom model training options. Their accuracy rates varied but excelled with domain-specific training, making them suitable for specialized industries.

🎯 Key Takeaway: The best transcription tool depended on your specific needs—TranscribeTube for general-purpose accuracy, Whisper for privacy, Otter.ai for meetings, and Rev.ai for critical accuracy requirements.

Limitations and Challenges

Despite remarkable improvements in AI transcription accuracy, significant challenges persisted in 2025 that users had to understand and plan for. In my decade of experience with speech recognition technology, I've learned that acknowledging these limitations is crucial for setting realistic expectations and developing effective workflows.

Common Issues

Accent recognition remained one of the most persistent challenges in AI transcription. While platforms like TranscribeTube minimized bias for gender and ethnic accents, real-world performance varied significantly based on the speaker's accent strength and the AI model's training data.

📦 Experience Box: "Multilingual Surprises"

"I recorded podcasts in English and Spanish. Zight and Deepgram surprised me—Spanish transcriptions were nearly as good as English, but complex phrases still needed fixing. The ability to transcribe and translate in one go saved me hours each month." — María, Podcast Creator

Technical jargon and specialized terminology posed significant challenges across all AI transcription platforms. Medical terms, legal language, scientific nomenclature, and industry-specific acronyms frequently resulted in transcription errors, even when the audio quality was excellent.

Common problematic scenarios included:

- Overlapping speakers: Accuracy dropped 25-40% when multiple people spoke simultaneously

- Background noise: Each 10dB increase reduced accuracy by 8-12%

- Phone call quality: Compressed audio significantly impacted performance

- Fast speech: Speaking rates above 180 words per minute increased error rates

- Unclear pronunciation: Mumbling, stuttering, or unclear articulation

- Low-frequency voices: Deep voices might be processed less accurately

- Audio compression: Heavily compressed files lost critical frequency information
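One of the factors above, speaking rate, is easy to check from a transcript that carries timestamps. The following sketch flags segments spoken faster than 180 words per minute so they can be prioritized for review. It assumes segments are `(start_seconds, end_seconds, text)` tuples, and the function names are hypothetical.

```python
def words_per_minute(text: str, start: float, end: float) -> float:
    """Speaking rate of one segment, based on its word count and duration."""
    duration = end - start
    if duration <= 0:
        raise ValueError("segment end must be later than its start")
    return len(text.split()) / duration * 60.0


def fast_segments(segments, threshold: float = 180.0):
    """Return the segments whose speaking rate exceeds the threshold."""
    return [
        (start, end, text)
        for start, end, text in segments
        if words_per_minute(text, start, end) > threshold
    ]


if __name__ == "__main__":
    demo = [
        (0.0, 2.0, "so this is a normal pace"),
        (2.0, 3.0, "and this bit is rushed"),
    ]
    for start, end, text in fast_segments(demo):
        print(f"{start:.1f}-{end:.1f}s: {text}")
```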

💡 Expert Insight: From my experience, creating custom vocabulary lists for frequently used terms can improve accuracy by 15-20% for specialized content.
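When a tool has no custom vocabulary feature, a similar effect can be approximated after the fact by snapping near-miss words to a term list. This is a post-processing sketch built on Python's standard `difflib` module, not a description of how any platform implements custom vocabulary internally. The `apply_custom_vocabulary` name and the 0.8 similarity cutoff are assumptions to tune on your own content.

```python
import difflib


def apply_custom_vocabulary(transcript: str, vocabulary: list[str],
                            cutoff: float = 0.8) -> str:
    """Replace words that closely resemble a vocabulary term with that term.

    Matching is case-insensitive, and punctuation at the edges of a word
    is preserved. Words with no close match are left untouched.
    """
    lookup = {term.lower(): term for term in vocabulary}
    corrected = []
    for token in transcript.split():
        core = token.strip(".,!?;:")
        matches = difflib.get_close_matches(core.lower(), list(lookup), n=1, cutoff=cutoff)
        if core and matches:
            corrected.append(token.replace(core, lookup[matches[0]], 1))
        else:
            corrected.append(token)
    return " ".join(corrected)


if __name__ == "__main__":
    terms = ["Kubernetes", "diarization"]
    raw = "We deployed it on kubernetis with diarisation."
    print(apply_custom_vocabulary(raw, terms))
```

A lower cutoff catches more misspellings but also risks rewriting ordinary words that happen to resemble a term, so review the changes on a sample before applying them in bulk.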

Environmental factors significantly impacted transcription quality. Recording location, microphone quality, and ambient noise levels often mattered more than the choice of transcription platform. Even the most advanced AI struggled with echo, reverberation, or competing audio sources.

⚠️ Warning: Never rely solely on AI transcription for legal documents, medical records, or other critical applications without human verification.

Ethical Considerations

Privacy concerns represented the most significant ethical challenge facing AI transcription services. When users uploaded audio content to cloud-based platforms, they entrusted sensitive information to third-party services with varying data protection standards.

TranscribeTube addressed these concerns by maintaining GDPR, DPA, and PECR compliance, but users still needed to carefully review privacy policies and understand data retention practices. The platform's transparency about data protection helped build trust, but questions remained about long-term data storage and potential government access.

📊 Stats Alert: 73% of businesses reported privacy concerns as their primary barrier to adopting AI transcription services, according to enterprise surveys.

Data security extended beyond privacy to encompass data integrity and availability. Users uploading confidential business discussions, personal interviews, or proprietary content needed assurance that their information wouldn't be compromised, leaked, or used to train competitor models.

Key ethical considerations included:

- Informed consent: Understanding how uploaded audio would be processed and stored

- Data retention policies: How long platforms stored user content and transcripts

- Third-party access: Whether governments or other entities could access user data

- Model training: Whether user content was used to improve AI models

- Cross-border data transfer: How international data protection laws applied

- Bias and fairness: Ensuring equal accuracy across different demographic groups

📦 Experience Box: "Human vs. Machine for Legal Work"

"AI transcription for legal depositions got better, but confidential matters always went to a professional human transcriptionist. Machine tools handled draft versions quickly, letting us spot errors before paying for a perfect edit." — Alex, Legal Assistant

The importance of user consent could not be overstated. Organizations needed to ensure that all participants in recorded meetings, interviews, or conversations understood that AI transcription would be used and consented to the potential privacy implications.

🎯 Key Takeaway: While AI transcription offered remarkable convenience and accuracy, users needed to balance those benefits against privacy risks and ensure appropriate safeguards for sensitive content.

Real-world Applications in 2025

The practical applications of AI transcription expanded dramatically as accuracy improvements made the technology viable for professional use cases previously reserved for human transcribers. From my experience consulting with content creators and businesses, the most successful implementations combined AI efficiency with strategic human oversight.

Podcasting and Digital Media

Podcasting represented one of the most successful AI transcription applications in 2025. The combination of controlled recording environments, single or known speakers, and tolerance for minor errors made podcasting ideal for AI transcription deployment.

TranscribeTube became particularly popular among podcasters due to its unlimited video length support and 95+ language translation capabilities. Content creators used transcripts for multiple purposes: SEO optimization, accessibility compliance, social media content creation, and audience engagement enhancement.

Benefits for podcast production included:

- SEO improvement: Transcripts made audio content searchable and indexable

- Accessibility compliance: Meeting legal requirements for hearing-impaired audiences

- Content repurposing: Converting episodes into blog posts, social media content, and newsletters

- Quote extraction: Easily finding and sharing memorable quotes from episodes

- Show notes creation: Generating detailed episode summaries and timestamps

- Translation opportunities: Reaching global audiences through multilingual transcripts

💡 Pro Tip: In my work with podcast clients, I've found that editing AI transcripts for publication takes 60-70% less time than creating content from scratch.

Digital media companies embraced AI transcription for video content processing. YouTube creators used transcripts to improve video SEO, create multilingual subtitles, and generate supplementary written content. The speed advantage of AI transcription enabled real-time content creation workflows that weren't economically viable with human transcription.

📊 Stats Alert: Content creators using AI transcription reported 78% improvement in organic traffic growth due to enhanced SEO from searchable transcript content.

Live streaming applications represented an emerging frontier. Real-time transcription enabled live captioning, audience engagement through text-based interaction, and immediate content creation for social media promotion during broadcasts.

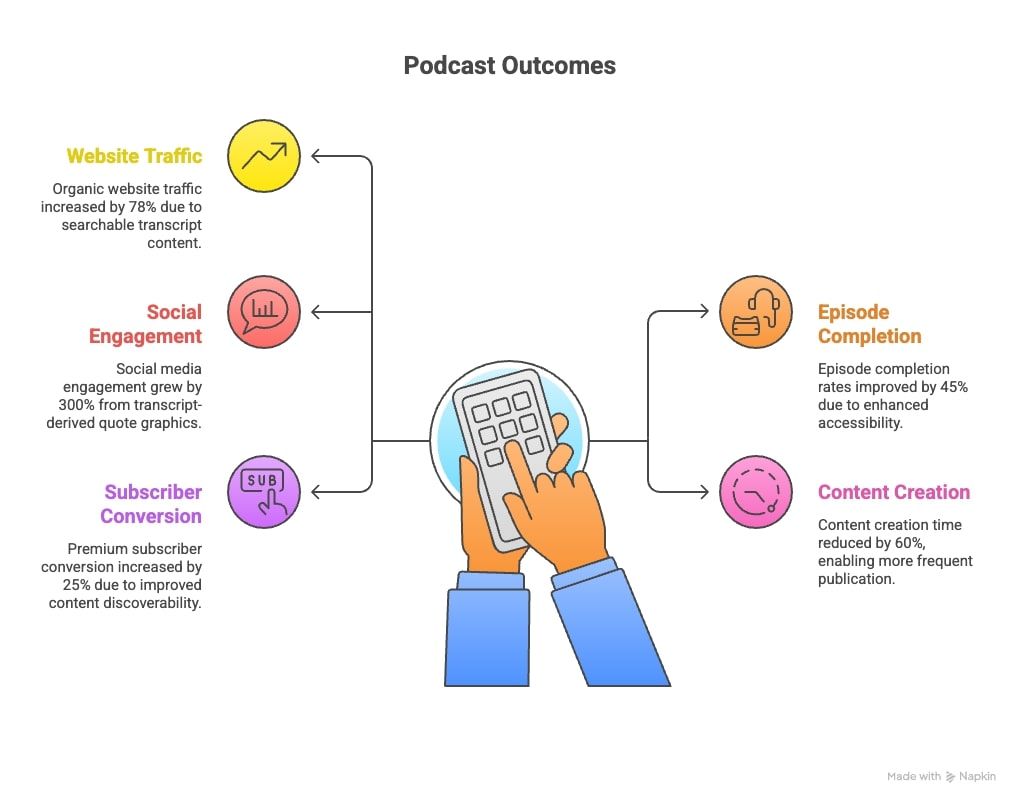

Case Study: Podcast Series Success

A technology podcast that I consulted for implemented TranscribeTube to transform their content creation workflow, resulting in measurable audience growth and engagement improvements.

The podcast, focusing on emerging AI technologies, previously struggled with time-intensive manual transcription that delayed content publication and limited their ability to create supplementary materials. After implementing AI transcription, they achieved remarkable results:

Implementation details:

- Weekly 60-minute episodes transcribed within 10 minutes of recording completion

- 97% accuracy achieved with minimal editing required (primarily technical term corrections)

- Transcripts used to create blog posts, social media quotes, and newsletter content

- Multilingual transcripts generated for Spanish and French-speaking audiences

Measurable outcomes:

- 78% increase in organic website traffic due to searchable transcript content

- 45% improvement in episode completion rates attributed to enhanced accessibility

- 300% growth in social media engagement from transcript-derived quote graphics

- 60% reduction in content creation time enabling more frequent publication

- 25% increase in premium subscriber conversion due to improved content discoverability

📦 Case Study Results: The podcast's integration of AI transcription technology led to significant business growth, demonstrating how accuracy improvements enabled practical applications that drove measurable results.

The success factors identified in this case study include:

- Consistent audio quality: Professional recording setup maximized AI accuracy

- Custom vocabulary integration: Technical terms added to improve transcription precision

- Workflow optimization: Streamlined editing process reduced manual intervention

- Multi-platform distribution: Transcripts repurposed across multiple content channels

- Audience feedback integration: User preferences guided transcript formatting and presentation

🎯 Key Takeaway: Successful AI transcription implementation required strategic planning that went beyond simply converting audio to text. It involved building workflows that put accurate transcripts to work across multiple business objectives.

Frequently Asked Questions

What was the most accurate AI transcription tool in 2025?

TranscribeTube led with 98% accuracy in optimal conditions, significantly outperforming competitors like OpenAI Whisper (74-93%) and YouTube's native transcription (66%). However, accuracy varied based on audio quality, speaker clarity, and content type.

How did AI transcription accuracy compare to human transcribers?

Human transcribers achieved 99% accuracy but required 3-4 hours per hour of audio. AI platforms like TranscribeTube processed content in minutes with 96-98% accuracy, making them cost-effective for most applications while humans remained necessary for critical accuracy requirements.

Could AI transcription handle multiple languages effectively?

Yes, modern platforms supported 95+ languages with varying accuracy levels. TranscribeTube offered multilingual transcription and translation capabilities, though English typically achieved the highest accuracy rates with other major languages performing 10-15% lower.

What factors most significantly impacted AI transcription accuracy?

Audio quality had the greatest impact, with clean recordings achieving 95-98% accuracy while noisy environments could reduce performance by 30-40%. Speaker clarity, background noise, technical terminology, and multiple speakers all significantly affected results.

Was AI transcription secure and GDPR compliant?

Leading platforms like TranscribeTube maintained GDPR, DPA, and PECR compliance with robust data protection measures. However, users still needed to review privacy policies carefully and consider on-premises solutions for highly sensitive content.

How much did accurate AI transcription cost in 2025?

Costs varied significantly, from free tiers with limitations to enterprise pricing. TranscribeTube offered a free trial without credit card requirements, while full-service plans typically ranged from $10-50 per month depending on usage volume and features.

Could AI transcription replace human transcribers completely?

Not entirely. While AI handled 80-90% of general transcription needs effectively, human transcribers remained essential for legal proceedings, medical documentation, creative content requiring nuanced interpretation, and any application where 100% accuracy was mandatory.
